VR Teamspace

Meetings when you can’t meet

5 years in development

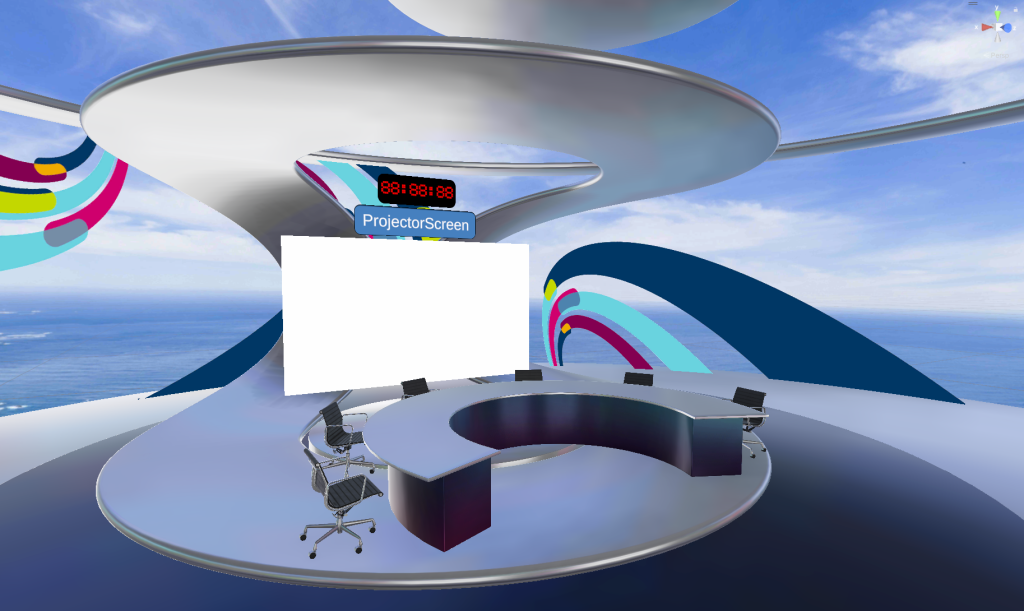

Brief

Create a VR meeting app for doctors and medical consultants around the world to meet and collaborate face to face during the global pandemic.

Team

1 Unity developer, 1 back-end server side developer.

Challenges:

Hardware:

VR hardware is evolving rapidly. We initially developed the app for the Oculus Quest and later ported it to several other platforms, including the Pico 3 and 4 and various Vive headsets, as well as producing a desktop PC version. This required a separate development branch for each platform to incorporate the various SDKs and the platform-specific changes to our code.

Solution:

We moved from platform-specific SDKs and custom code to the cross-platform OpenXR package, which in recent years has added features such as hand tracking for controller-free use. This let us streamline both the application and the development process, and support any headset that provided an OpenXR plugin.

3D Avatars

Requirements

- Low poly, to allow rooms of up to 15 people at once; we found a maximum of 50k polygons per avatar to be the ‘sweet spot’.

- Blendshapes for realistic mouth and facial movements.

- Rigged for natural body movements.

- Full-body avatars to reinforce the immersive feel of meetings.

- Compatible with Unity’s Humanoid system.

- Photorealistic avatars that are quick to create from a single passport-style photo and instantly recognisable.

Solutions

We created a prefab rig that could easily be customised to support any avatar solution compatible with Unity’s Humanoid system, including Avaturn, AvatarSDK, Character Creator, Didimo and UMA (Unity Multipurpose Avatar). We could even mix different avatar types within the same scene.
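A shared rig like this depends on every vendor's skeleton mapping onto the same humanoid bone set. The sketch below is written in Python for illustration rather than Unity C#. It checks a hypothetical vendor bone map against the 15 bones that, as we understand Unity's documentation, the Humanoid rig treats as required. The `missing_bones` helper and the `vendor:` naming are invented for the example and do not come from any avatar SDK.

```python
"""Check that a vendor skeleton can drive a shared humanoid prefab rig.

Illustrative sketch only: bone names mirror Unity's HumanBodyBones enum,
but the vendor mapping below is hypothetical.
"""
from __future__ import annotations

# Bones Unity's Humanoid rig treats as required; others (Chest, Neck, ...) are optional.
REQUIRED_HUMANOID_BONES = {
    "Hips", "Spine", "Head",
    "LeftUpperLeg", "LeftLowerLeg", "LeftFoot",
    "RightUpperLeg", "RightLowerLeg", "RightFoot",
    "LeftUpperArm", "LeftLowerArm", "LeftHand",
    "RightUpperArm", "RightLowerArm", "RightHand",
}


def missing_bones(mapping: dict[str, str]) -> set[str]:
    """Return required humanoid bones that the vendor mapping leaves unassigned.

    `mapping` maps humanoid bone names to the vendor's own transform names.
    """
    return {bone for bone in REQUIRED_HUMANOID_BONES if not mapping.get(bone)}


# Hypothetical vendor skeleton with its own transform naming scheme.
vendor_map = {bone: f"vendor:{bone}" for bone in REQUIRED_HUMANOID_BONES}
vendor_map["Chest"] = "vendor:Spine1"  # optional bones may also be mapped

if __name__ == "__main__":
    gaps = missing_bones(vendor_map)
    print("compatible" if not gaps else f"missing: {sorted(gaps)}")
```

Any skeleton that passes this kind of check can be retargeted onto the same prefab, which is what allowed different avatar types to share one scene.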

Synchronisation:

Real-time sync of voice, face and body movements, as well as animated objects that move in sync on all headsets and can be ‘picked up’ and passed around, transferring ‘ownership’ of the object.

Solution:

We found Photon PUN and Photon Voice to be the simplest and cheapest solution. Photon Voice proved easy to implement, with virtually no lag even between participants in Asia, Europe and North America. PUN provides prebuilt components for syncing transforms and animations; our other synchronisation requirements were met with custom components that serialise properties and events.
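Owner-authoritative sync is the idea behind ‘picking up’ an object. The grabbing player becomes the owner, and only the owner broadcasts the object's state. The minimal Python model below is not the Photon PUN API; the `SharedObject` class and its packed field layout are hypothetical. It does follow the same principle as PUN's custom serialisation: values are written and read back in one fixed order.

```python
"""Sketch of owner-authoritative sync for a 'grabbable' shared object.

Hypothetical model for illustration, not the Photon PUN API.
"""
from __future__ import annotations

import struct
from dataclasses import dataclass

# Fixed field order: owner id, position xyz, rotation quaternion xyzw, highlight flag.
STATE_FORMAT = "<i3f4fB"


@dataclass
class SharedObject:
    owner: int
    position: tuple[float, float, float] = (0.0, 0.0, 0.0)
    rotation: tuple[float, float, float, float] = (0.0, 0.0, 0.0, 1.0)
    highlighted: bool = False

    def pick_up(self, player_id: int) -> None:
        """Picking up the object transfers ownership to the grabbing player."""
        self.owner = player_id

    def serialise(self, sender_id: int) -> bytes | None:
        """Only the current owner produces a state update; others send nothing."""
        if sender_id != self.owner:
            return None
        return struct.pack(STATE_FORMAT, self.owner, *self.position,
                           *self.rotation, int(self.highlighted))

    def apply(self, payload: bytes) -> None:
        """Remote copies unpack the fields in exactly the order they were packed."""
        fields = struct.unpack(STATE_FORMAT, payload)
        self.owner = fields[0]
        self.position = tuple(fields[1:4])
        self.rotation = tuple(fields[4:8])
        self.highlighted = bool(fields[8])
```

Because positions travel as 32-bit floats, a compact 33-byte update per object is enough. This matters when 15 headsets are all receiving the same stream.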

Motion sickness:

Motion sickness is the curse of VR. As with any motion sickness, its severity varies from person to person. For Teamspace to work, every participant had to be on board with using the technology: if even one user felt at all nauseous, they were likely to ‘drop out’ of meetings, and the meetings themselves would lose their value.

Solution:

To keep motion sickness to a minimum, avatars were either seated or standing and could change location only by teleporting. We also found that frame rates below 50-60fps could induce motion sickness, especially when users turned their heads quickly from side to side and caused frame drops. The environments therefore had to be low poly, with baked and mixed lighting in Unity’s Universal Render Pipeline (URP). With rooms expected to hold a maximum of 15 avatars, a limit of 50,000 polygons per avatar proved key.
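These limits become concrete with some arithmetic. At 60fps each frame has roughly 16.7 ms to render. A full room of 15 avatars at 50,000 polygons each puts 750,000 polygons on screen before the environment is counted. The small Python helpers below only restate that arithmetic; the function names are illustrative.

```python
"""Back-of-envelope performance budgets for a Teamspace-style room.

The 15-avatar, 50k-polygon and 50-60fps figures come from our testing;
the helper functions themselves are illustrative.
"""

MAX_AVATARS = 15
MAX_POLYS_PER_AVATAR = 50_000


def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame at the given frame rate."""
    return 1000.0 / fps


def avatar_poly_total(avatars: int = MAX_AVATARS,
                      polys_each: int = MAX_POLYS_PER_AVATAR) -> int:
    """Worst-case polygon count contributed by avatars alone."""
    return avatars * polys_each


if __name__ == "__main__":
    for fps in (50, 60, 72, 90):
        print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
    print(f"avatars alone: {avatar_poly_total():,} polygons")
```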

Dynamic Menu

To cater for many different meeting types and customer requirements, we wanted a menu system that could be customised ‘on the fly’ without rebuilding and redeploying the app each time.

Solution:

The solution was an event-based menu system. A configuration JSON file, generated from a SQL database, was downloaded from a web server at runtime. Among other things, this file contained the menu setup. Listeners on the UI then instantiated UI component prefabs to populate the canvas with buttons, click events, image sprites, text and custom colours.
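The flow from config file to canvas can be sketched in a few lines of Python. The JSON schema, the action names and the `MenuEvents`/`Canvas` classes are hypothetical stand-ins. In the app itself the listeners instantiated Unity UI prefabs rather than appending dictionaries.

```python
"""Event-based menu built from a downloaded JSON configuration.

Sketch only: the schema and listener API are hypothetical.
"""
from __future__ import annotations

import json
from typing import Callable

# Example of the kind of config a server might generate from the SQL database.
CONFIG_JSON = """
{
  "menu": [
    {"type": "button", "label": "Share screen", "action": "share_screen",
     "sprite": "icons/share.png", "colour": "#1E88E5"},
    {"type": "button", "label": "Leave meeting", "action": "leave",
     "sprite": "icons/exit.png", "colour": "#E53935"}
  ]
}
"""

Listener = Callable[[dict], None]


class MenuEvents:
    """Minimal publish/subscribe hub: one event per menu item in the config."""

    def __init__(self) -> None:
        self._listeners: list[Listener] = []

    def subscribe(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def publish_menu(self, config_text: str) -> None:
        for item in json.loads(config_text).get("menu", []):
            for listener in self._listeners:
                listener(item)


class Canvas:
    """Stand-in for a UI canvas that 'instantiates' one widget per menu item."""

    def __init__(self, actions: dict[str, Callable[[], str]]) -> None:
        self.actions = actions
        self.widgets: list[dict] = []

    def on_menu_item(self, item: dict) -> None:
        # Unknown actions get a no-op handler instead of breaking the whole menu.
        handler = self.actions.get(item.get("action", ""), lambda: "ignored")
        self.widgets.append({**item, "on_click": handler})


if __name__ == "__main__":
    canvas = Canvas({"leave": lambda: "leaving", "share_screen": lambda: "sharing"})
    events = MenuEvents()
    events.subscribe(canvas.on_menu_item)
    events.publish_menu(CONFIG_JSON)
    for widget in canvas.widgets:
        print(widget["label"], "->", widget["on_click"]())
```

Changing the menu then only means regenerating the JSON on the server. The app picks up the new layout the next time it downloads the file.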

Holopresenter

Brief

Create an AR app that pharmaceutical sales reps could use to engage potential clients at trade shows and congresses and sell their products.

1 year in development

Team

1 Unity developer, 1 back-end server side developer.

Challenges:

Hardware

The ageing HoloLens was our original target for the project; indeed, we had used it for a similar project in the past. We considered the HoloLens 2, but the specification of the much-awaited new headset was a big disappointment in terms of performance. We needed something capable of running Evercoast 3D volumetric videos and large 3D animations. We were also going to need around 30-50 headsets, and HoloLens was difficult to obtain in those numbers.

Solution:

Magic Leap 2 had launched a few months earlier, and we worked closely with Magic Leap and Evercoast throughout development, as much of what we were doing was cutting edge. We held several Teams meetings with their development teams around the globe to work through our problems. One issue we discovered early on was that the Evercoast plugin was built only for ARM, not for the Magic Leap 2's x86-64 target architecture.

Synchronisation

The sales reps needed to control and synchronise the content on all the headsets without internet access, since at large events like these you typically cannot rely on a fast, stable internet connection.

Solution:

We used a router to create our own Wi-Fi LAN and ran a Node.js server on a laptop. This let us sync events on the headsets using JavaScript commands sent through an HTML page in a browser on an iPad.
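The server's job is essentially a relay. The controller page posts a command, and the server fans it out to every connected headset. The server also remembers the latest state so a headset that reconnects mid-presentation can catch up. The production server was Node.js. This transport-agnostic Python model shows only that relay logic, and the message fields (`seq`, `cmd`, `scene`) are hypothetical.

```python
"""Transport-agnostic sketch of the LAN sync relay.

The production setup used a Node.js server; this model only shows the relay
logic. Message fields ("seq", "cmd", "scene") are hypothetical.
"""
from __future__ import annotations

import json
from typing import Callable

Send = Callable[[str], None]


class SyncHub:
    def __init__(self) -> None:
        self.headsets: dict[str, Send] = {}
        self.seq = 0
        self.last_message: str | None = None  # replayed to late joiners

    def connect(self, headset_id: str, send: Send) -> None:
        """Register a headset and bring it up to date with the current state."""
        self.headsets[headset_id] = send
        if self.last_message is not None:
            send(self.last_message)

    def disconnect(self, headset_id: str) -> None:
        self.headsets.pop(headset_id, None)

    def command(self, cmd: str, **args: object) -> str:
        """Handle a command from the controller page and fan it out to all headsets."""
        self.seq += 1
        message = json.dumps({"seq": self.seq, "cmd": cmd, **args})
        self.last_message = message
        for send in list(self.headsets.values()):
            send(message)
        return message
```

The sequence number lets a headset ignore stale or duplicated commands if the Wi-Fi briefly drops and replays messages.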

Marker tracking

As well as keeping the AR presentations on several headsets in sync, we had to ensure that all users saw the content at the same location, as if they were all standing around the same object in space.

Solution:

The Magic Leap 2 supports marker tracking using ArUco markers, square fiducial codes similar in appearance to QR codes. These were incorporated into the physical display so that, when a headset detected a marker, its real-world location could be used to anchor the virtual 3D content.
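Each headset reports the marker's pose in its own coordinate frame. All headsets place the content at the same fixed offset from the marker, so they agree on where it sits in the room. The Python sketch below makes a simplifying assumption: the pose is reduced to a position plus a yaw about the vertical axis. A real implementation would use the full rotation the tracking API reports. `CONTENT_OFFSET` and the `Pose` class are hypothetical.

```python
"""Placing shared content relative to a detected marker.

Simplifying assumption: marker pose = position + yaw about the vertical y axis.
"""
from __future__ import annotations

import math
from dataclasses import dataclass

# Hypothetical placement: 0.5 m above and 0.3 m in front of the marker.
CONTENT_OFFSET = (0.0, 0.5, 0.3)


@dataclass(frozen=True)
class Pose:
    x: float
    y: float
    z: float
    yaw_deg: float  # rotation about the vertical (y) axis

    def transform_point(self, lx: float, ly: float, lz: float) -> tuple[float, float, float]:
        """Convert a point from this pose's local frame into the headset's world frame."""
        yaw = math.radians(self.yaw_deg)
        c, s = math.cos(yaw), math.sin(yaw)
        # Rotate about y, then translate by the marker position.
        wx = c * lx + s * lz + self.x
        wz = -s * lx + c * lz + self.z
        return (wx, ly + self.y, wz)


def content_position(marker_pose: Pose) -> tuple[float, float, float]:
    """World position for the hologram, given where this headset sees the marker."""
    return marker_pose.transform_point(*CONTENT_OFFSET)
```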

Motion Sickness

Although motion sickness is less of a problem in augmented reality than in VR, it can still be an issue. We targeted 60fps and kept load times for instantiated objects within 3 seconds to keep the presentations running smoothly. Many of the models were abstract and complex, with high poly counts and large numbers of keyframes and textures, and they had been created in a variety of modelling packages.

Solution:

Models were to be optimised in their native software wherever possible; tools such as Pixyz Studio give mixed results and often create other issues. The glTF format should be avoided, as Unity’s import settings for optimising meshes and animation keyframes are inferior to those available for FBX files. Textures should not be embedded, as this only increases file size and therefore load times. We specified a maximum of two textures per object (one albedo, one normal map), each no larger than 1024×1024. This can be achieved by UVW unwrapping the model in the 3D modelling software so that all of its surfaces share a single texture layout.
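A specification like this is easy to check automatically before models reach Unity. The Python sketch below encodes the rules above. The `AssetInfo` and `Texture` structures are hypothetical summaries that an export script might produce. The memory helper shows why 1024×1024 is a sensible cap: uncompressed RGBA with a full mip chain is about 5.3 MiB per texture before GPU compression.

```python
"""Validate a model against the Holopresenter asset specification.

Limits are from our spec; AssetInfo/Texture are hypothetical pipeline summaries.
"""
from __future__ import annotations

from dataclasses import dataclass, field

MAX_TEXTURES = 2
MAX_TEXTURE_SIZE = 1024
ALLOWED_ROLES = {"albedo", "normal"}


@dataclass
class Texture:
    role: str
    width: int
    height: int
    embedded: bool = False


@dataclass
class AssetInfo:
    name: str
    file_format: str
    textures: list[Texture] = field(default_factory=list)


def spec_violations(asset: AssetInfo) -> list[str]:
    """Return a human-readable list of spec breaches (empty if the asset passes)."""
    problems = []
    if asset.file_format.lower() != "fbx":
        problems.append(f"{asset.name}: use FBX rather than {asset.file_format}")
    if len(asset.textures) > MAX_TEXTURES:
        problems.append(f"{asset.name}: {len(asset.textures)} textures (max {MAX_TEXTURES})")
    for tex in asset.textures:
        if tex.role not in ALLOWED_ROLES:
            problems.append(f"{asset.name}: unexpected texture role '{tex.role}'")
        if max(tex.width, tex.height) > MAX_TEXTURE_SIZE:
            problems.append(f"{asset.name}: {tex.role} is {tex.width}x{tex.height}")
        if tex.embedded:
            problems.append(f"{asset.name}: {tex.role} texture is embedded")
    return problems


def texture_memory_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Uncompressed size including the full mip chain down to 1x1."""
    total = 0
    while True:
        total += width * height * bytes_per_pixel
        if width == 1 and height == 1:
            return total
        width, height = max(1, width // 2), max(1, height // 2)
```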

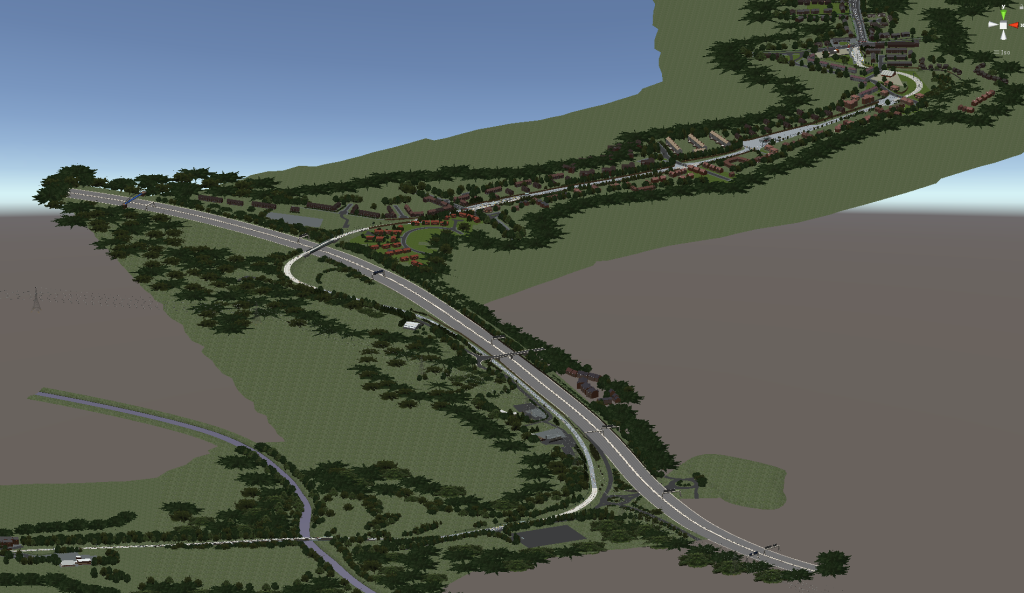

Tram Pro

5 years in development

Brief

Create a light rail desktop simulator to give tram drivers route knowledge of all new and existing tram routes in Manchester and Nottingham, and to simulate event scenarios such as signal/points failures and SPAS light triggering (flashing blue lights at intervals along the track that trigger when a tram passes a stop signal or overspeeds).
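The SPAS scenario reduces to a simple rule that a simulator can evaluate on every physics step. The lights trigger if the tram crossed a signal showing stop since the last step, or if it is over the speed limit. The Python sketch below is a hypothetical simplification; the `Signal` structure and the rule itself are illustrative, not the operators' specification.

```python
"""Illustrative SPAS trigger rule evaluated once per simulation step.

Hypothetical simplification; not the operators' actual specification.
"""
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    position_m: float  # distance along the route
    is_stop: bool      # True when the signal is showing stop


def spas_triggered(prev_pos_m: float, pos_m: float, speed_kmh: float,
                   limit_kmh: float, signals: list[Signal]) -> bool:
    """True if this step should trigger the flashing blue SPAS lights."""
    overspeed = speed_kmh > limit_kmh
    # A stop signal counts as passed if it lies between the last and current positions.
    passed_stop = any(s.is_stop and prev_pos_m < s.position_m <= pos_m for s in signals)
    return overspeed or passed_stop
```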

Team

1 Unity developer, 1 Unity developer/environmental artist/3D modeller/technical artist, 1 environmental artist/3D modeller, 1 human factors/sales director.

Challenges:

Modelling:

The tram routes were many miles long, and much of them were still in various stages of construction, so videos or Google Street View-style photography could not be used to provide drivers with the visual cues they needed to recognise the routes.

Solution:

The whole environment had to be modelled in 3D using 2D OS map data, orthographic aerial photographs of the terrain, 3D splines of the track alignment and photographic site surveys. The surveys were gathered by walking the track in both directions, accompanied by rail staff where the lines were ‘live’ and by construction crews where the route was still a construction site.

Simulate the feel and movement of a light rail tram

Solution:

To give a realistic experience, we used Unity’s physics engine with a capsule collider and modelled a toboggan/bobsleigh-style system to simulate movement along the rails. Controlling movement through forces, gravity and friction materials gave a real-world feel of locomotion, which is at times bumpy, especially when going over points.
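The same forces can be seen in a one-dimensional model of a tram moving along the track. This is a swapped-in simplification, not the Unity set-up described above. Instead of a capsule collider sliding between rails, it integrates traction, the gravity component on gradients, rolling friction and air drag as a point mass with a simple Euler step. The mass and coefficients are hypothetical round numbers.

```python
"""One-dimensional force model of a tram moving along the track.

Simplified stand-in for the Unity physics approach; vehicle figures are
hypothetical round numbers.
"""
import math

G = 9.81  # gravitational acceleration, m/s^2


def step(speed: float, traction_n: float, gradient: float, dt: float,
         mass_kg: float = 40_000.0, rolling_coeff: float = 0.002,
         drag_coeff: float = 8.0) -> float:
    """Advance speed (m/s) by one time step using a force balance.

    gradient is rise over run (0.04 = 4% uphill). Resistive forces oppose
    motion and are clamped so they never push the tram past standstill.
    """
    theta = math.atan(gradient)
    gravity = -mass_kg * G * math.sin(theta)              # pulls back on climbs
    rolling = mass_kg * G * math.cos(theta) * rolling_coeff
    drag = drag_coeff * speed * speed
    new_speed = speed + (traction_n + gravity) / mass_kg * dt
    resist = (rolling + drag) / mass_kg * dt
    # Apply resistance towards zero without overshooting.
    if new_speed > 0:
        new_speed = max(0.0, new_speed - resist)
    elif new_speed < 0:
        new_speed = min(0.0, new_speed + resist)
    return new_speed


if __name__ == "__main__":
    v = 0.0
    for _ in range(600):  # 60 s at dt = 0.1 s
        v = step(v, traction_n=20_000.0, gradient=0.0, dt=0.1)
    print(f"speed after 60 s: {v * 3.6:.1f} km/h")
```

Bumps over points could be layered on top as short force impulses at known track positions. In Unity, the collider and friction materials produced that effect directly.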